REST APIs are the backbone of modern distributed systems. Whether powering mobile apps, web frontends, or microservices, their performance directly impacts latency, throughput, scalability, and overall system reliability.

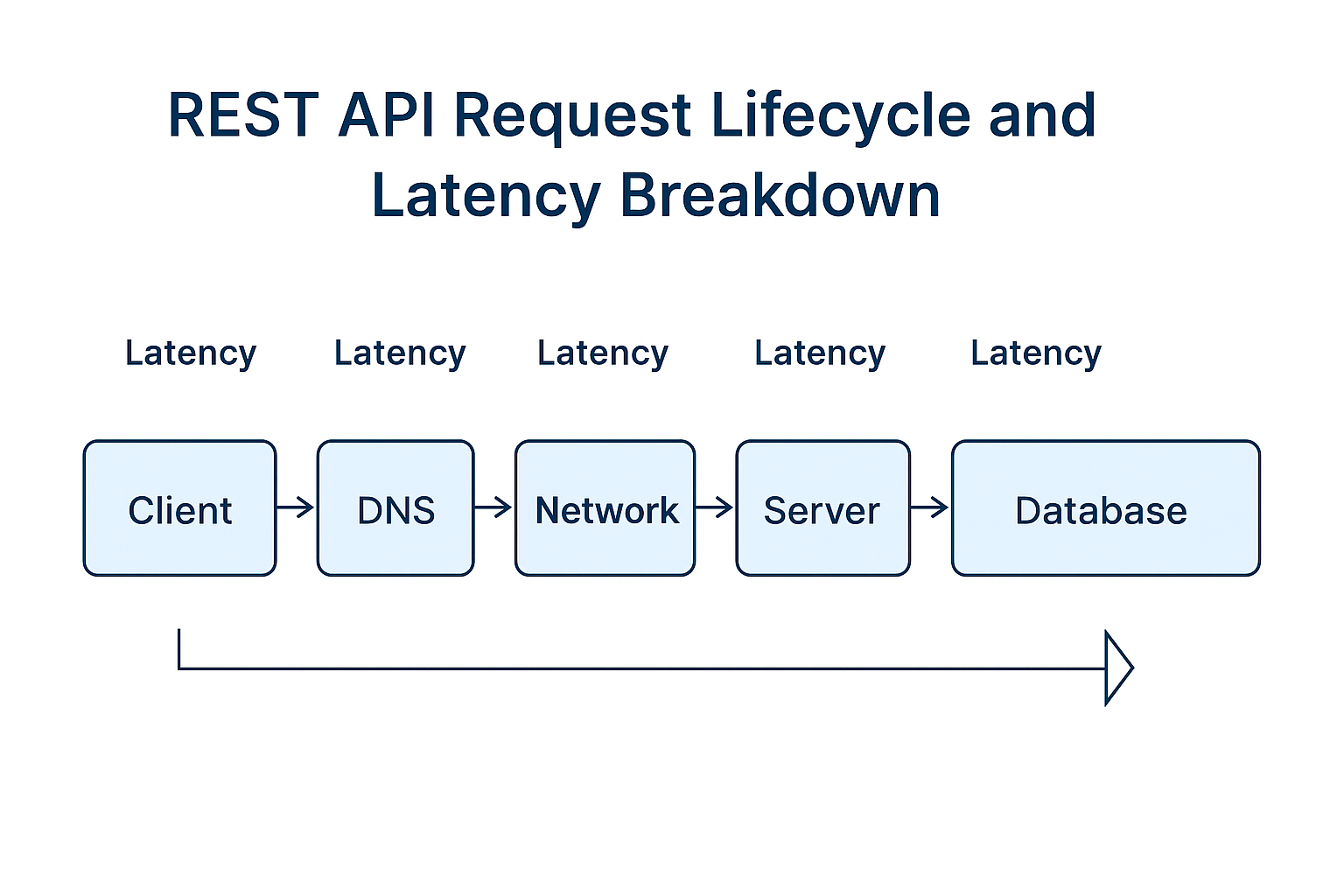

REST API performance optimization requires analyzing every layer in the request lifecycle—client, network, server, application logic, and data access—to reduce bottlenecks and improve p95/p99 tail latency.

This guide explains where performance issues typically occur and how to make APIs fast, resilient, and cost-efficient.

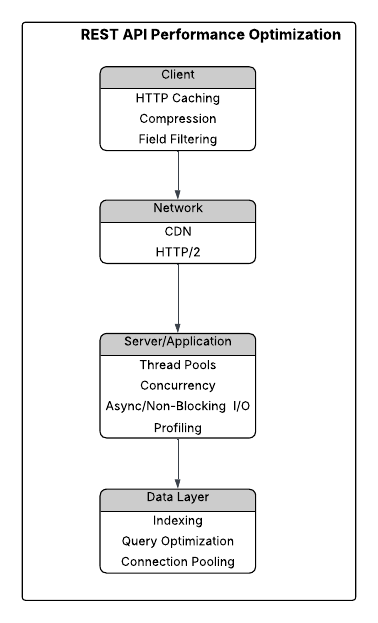

REST API performance optimization is not a single technique. It is the process of understanding and improving every layer in the request–response lifecycle:

Client → Network → Server/Application → Data Layer

This guide breaks down each layer, explores common bottlenecks, and provides proven techniques used in production-grade systems.

How to Think About REST API Performance

Effective REST API performance optimization reduces latency, improves throughput, and keeps APIs scalable by tuning the client, network, server, and data layers together rather than in isolation.

REST API performance is heavily influenced by how requests are routed and managed inside the framework. To understand this in depth, see Spring Boot and Spring Framework internals, which explains servlet mapping, DispatcherServlet flow, and application context behavior.

Most REST API bottlenecks arise from network round trips, serialization overhead, thread pool saturation, and database query latency.

Understanding end-to-end latency distribution, including TLS handshakes, HTTP/2 multiplexing, queue wait time, and DB response time, is essential. Optimizing only one layer rarely moves the needle — the biggest gains come from holistic, multi-layer optimization.

Client → Network → Load Balancer → Web Server → App Logic → Cache/DB → Back

Think of performance as a set of stacked constraints:

- Network latency limits responsiveness

- CPU and concurrency limit throughput

- Database performance often limits overall scalability

- API design determines how many requests are needed

This article goes through each layer, explaining why bottlenecks occur and how to fix them with practical, repeatable techniques.

Client & Network Optimization (Reducing Data Transfer)

Network latency often dominates total response time, especially in high-latency or mobile environments, and it is easy to overlook. Even perfectly optimized backend code feels slow if the network path is inefficient.

Reduce DNS, Connection Setup, and Round Trips

Every API call made over a new connection pays setup overhead before the request is even sent:

- DNS lookup

- TCP handshake

- TLS handshake

- HTTP negotiation

On slow networks, these can add 50–300 ms before any app logic executes.

Optimizations:

- Use persistent connections (Keep-Alive) to reduce handshake latency

- Enable TLS 1.3 for faster negotiation

- Minimize round trips by aggregating requests

- Apply client-side caching to avoid redundant network calls
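As a minimal sketch of connection reuse on the client side, the snippet below shares one `java.net.http.HttpClient` (Java 11+) across all calls so that its internal connection pool can keep connections alive between requests. The class name `ApiClient`, the example URL, and the timeout values are assumptions chosen for illustration, not recommendations.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

/** One shared client so connections are pooled and reused across calls. */
final class ApiClient {
    // Building a new client per request would throw away the connection pool each time.
    static final HttpClient CLIENT = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_2)     // preferred version; HTTP/1.1 is used if the server lacks HTTP/2
            .connectTimeout(Duration.ofSeconds(2))  // illustrative value
            .build();

    /** Builds a GET request for a caller-supplied URL. */
    static HttpRequest get(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(5))     // illustrative per-request timeout
                .header("Accept", "application/json")
                .GET()
                .build();
    }
}
```

A caller would send requests with `ApiClient.CLIENT.send(ApiClient.get("https://api.example.com/users/1"), HttpResponse.BodyHandlers.ofString())`; repeated calls to the same host can then skip the DNS, TCP, and TLS setup steps listed above.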

Enable HTTP/2 and Response Compression

HTTP/2 provides:

- Multiplexed streams (no HTTP-level head-of-line blocking, although TCP-level blocking remains)

- Header compression

- Better throughput under load

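Compression (gzip or Brotli) can often reduce JSON payload size by 70–90% for large, repetitive responses, lowering bandwidth usage and transfer time. Very small payloads gain little and still pay the CPU cost of compressing.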
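To get a feel for how well JSON compresses, the following sketch gzips a synthetic, repetitive JSON array using only the JDK. The `GzipDemo` class and its sample data are hypothetical; real ratios depend on your payloads, so measure with your own responses.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

final class GzipDemo {
    /** Compresses UTF-8 text with gzip. */
    static byte[] gzip(String text) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(text.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();   // read only after the gzip stream is closed
    }

    /** Reverses gzip(), used here to check the round trip. */
    static String gunzip(byte[] compressed) {
        try (GZIPInputStream gz = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            return new String(gz.readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Builds a repetitive JSON array, similar in shape to a list endpoint response. */
    static String sampleJson(int items) {
        StringBuilder sb = new StringBuilder("[");
        for (int i = 0; i < items; i++) {
            if (i > 0) sb.append(',');
            sb.append("{\"id\":").append(i)
              .append(",\"name\":\"user").append(i)
              .append("\",\"status\":\"active\"}");
        }
        return sb.append(']').toString();
    }
}
```

Comparing `GzipDemo.sampleJson(500).length()` with `GzipDemo.gzip(GzipDemo.sampleJson(500)).length` shows the saving for this synthetic case. In practice compression is usually enabled in the web server, gateway, or framework configuration rather than written by hand.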

Caching at the Edge and Client Layer

Caching drastically reduces server load and improves response time consistency.

Use:

- Cache-Control

- ETag

- Last-Modified

- CDN caching (Cloudflare, Fastly)

These reduce both latency and infrastructure cost, especially for frequently accessed resources.
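The ETag mechanism can be sketched as a pure function: hash the current representation, compare it with the client's `If-None-Match` value, and skip the body when they match. This is a simplified illustration; the `ConditionalGet` class is hypothetical, and it ignores weak validators, comma-separated lists, and the `*` form that the header also allows.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

final class ConditionalGet {
    /** Minimal response holder for the sketch. */
    static final class Response {
        final int status;
        final String etag;
        final String body;   // null when nothing is sent
        Response(int status, String etag, String body) {
            this.status = status;
            this.etag = etag;
            this.body = body;
        }
    }

    /** Strong ETag: the quoted hex SHA-256 of the response body. */
    static String etagFor(String body) {
        try {
            byte[] hash = MessageDigest.getInstance("SHA-256")
                    .digest(body.getBytes(StandardCharsets.UTF_8));
            StringBuilder sb = new StringBuilder("\"");
            for (byte b : hash) sb.append(String.format("%02x", b));
            return sb.append('"').toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);   // SHA-256 is required on every Java platform
        }
    }

    /** ifNoneMatch is the client's If-None-Match header value, or null if absent. */
    static Response handle(String ifNoneMatch, String currentBody) {
        String etag = etagFor(currentBody);
        if (etag.equals(ifNoneMatch)) {
            return new Response(304, etag, null);   // Not Modified: the client reuses its cached copy
        }
        return new Response(200, etag, currentBody);
    }
}
```

The first request receives a 200 with an ETag; a repeat request that echoes that ETag receives a 304 with no body, which saves bandwidth even though the server still computed the representation. Caching the ETag alongside the resource avoids that remaining cost.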

REST vs gRPC — When Performance Matters

REST is ideal for public APIs, but internal microservices often benefit from binary serialization, Protocol Buffers, and HTTP/2 multiplexing used in gRPC.

REST (typically JSON over HTTP/1.1, though it also runs over HTTP/2):

- Human-readable

- Larger payloads

- Slower encoding/decoding

- Limited streaming

gRPC (Protocol Buffers over HTTP/2):

- Faster serialization/deserialization

- Smaller payload size

- Client/server streaming

- Improved bandwidth efficiency

When to use REST:

- Public APIs

- Browser clients

- Human-readable, loosely structured data

When to use gRPC:

- Microservice-to-microservice calls

- High throughput internal systems

- Real-time streaming

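Switching internal API calls from REST to gRPC can deliver a noticeable latency reduction and a substantial improvement in throughput. Figures of 30–80% are often quoted, but the actual gain depends on payload shape and workload, so benchmark before committing.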

Server & Application Layer Optimization

Once a request reaches your server, the critical factors become:

- CPU utilization (processing cost)

- thread pool configuration

- serialization and deserialization overhead

- blocking vs non-blocking I/O

- async job offloading

- error handling and retry logic

Optimize Concurrency, Thread Pools, and Resource Usage

Creating a new thread per request does not scale. Use a thread pool sized to CPU and workload. Thread pools directly influence tail latency and request throughput.

If you’re implementing this in Java, see my practical deep-dive on configuring and tuning thread pools with ExecutorService and ThreadPoolExecutor: ExecutorService & Thread Pools in Java — A Practical Guide.

Best practices:

- For CPU-bound tasks → pool size ≈ number of cores

- For I/O-bound tasks → larger pool (threads often block on I/O)

- Use ExecutorService or ThreadPoolExecutor

- Avoid excessive context switching

- Monitor queue depth to detect thread starvation

Poor thread pool sizing shows up most clearly during traffic spikes, where it leads to:

- long queue wait times and timeouts

- high tail latency (p99)

- dropped requests under load
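The sizing rules above can be sketched with the JDK's `ThreadPoolExecutor`. The I/O-bound formula used here, cores × (1 + wait time / compute time), is a commonly cited heuristic and only a starting point for measurement; the `Pools` class, its method names, and the queue capacity are assumptions for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class Pools {
    /** CPU-bound work: roughly one thread per core. */
    static int cpuBoundSize(int cores) {
        return cores;
    }

    /** I/O-bound work: cores * (1 + wait/compute), a heuristic to refine by measuring. */
    static int ioBoundSize(int cores, double waitMs, double computeMs) {
        return (int) Math.max(1, Math.round(cores * (1 + waitMs / computeMs)));
    }

    /** Fixed-size pool with a bounded queue, so backlog cannot grow without limit. */
    static ThreadPoolExecutor bounded(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(
                threads, threads,
                0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy());   // reject when full instead of queueing forever
    }
}
```

With a bounded queue, overload surfaces as a `RejectedExecutionException` that the API can translate into a fast 503 or 429 rather than a slow timeout. Queue depth is available through `pool.getQueue().size()` and is worth exporting as a metric.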

Move Slow Work to Background Processing

REST endpoints should return quickly. Offload heavy operations to async systems.

Use:

- message queues (Kafka, SQS, RabbitMQ)

- event-driven architectures

- worker pools

- asynchronous I/O

This improves response time, system resiliency, and load distribution during peak traffic.
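A minimal in-process sketch of the pattern: the endpoint records a job, hands the slow work to a worker pool, and returns a job ID immediately (typically with HTTP 202), while a second endpoint reports status. The `ReportJobs` class and the `/reports` routes in the comments are hypothetical. An in-memory pool like this loses jobs on restart, which is one reason production systems use a durable queue such as those listed above.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class ReportJobs {
    enum Status { PENDING, DONE, FAILED }

    private final Map<String, Status> jobs = new ConcurrentHashMap<>();
    private final ExecutorService workers = Executors.newFixedThreadPool(2);   // illustrative size

    /** Handler body for a hypothetical POST /reports: enqueue and return at once. */
    String submit(Runnable slowWork) {
        String jobId = UUID.randomUUID().toString();
        jobs.put(jobId, Status.PENDING);
        workers.submit(() -> {
            try {
                slowWork.run();
                jobs.put(jobId, Status.DONE);
            } catch (RuntimeException e) {
                jobs.put(jobId, Status.FAILED);
            }
        });
        return jobId;   // the client polls with this ID
    }

    /** Handler body for a hypothetical GET /reports/{jobId}; null means unknown job. */
    Status status(String jobId) {
        return jobs.get(jobId);
    }

    /** Stops accepting jobs and waits for in-flight ones; returns false on timeout. */
    boolean shutdownAndWait(long timeoutMs) {
        workers.shutdown();
        try {
            return workers.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

The request thread is released as soon as `submit` returns, so response time no longer depends on how long the report takes to build.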

Reduce Serialization/Deserialization Overhead

JSON serialization is CPU-heavy. Improve performance with these optimizations:

- Use an efficient JSON library and configure it well (for example, reuse a single Jackson ObjectMapper instead of creating one per request, and benchmark alternatives such as Gson or JSON-B before switching)

- Exclude unnecessary fields

- Replace large nested objects with simplified structures

- Cache pre-built views if possible

- Switch to binary formats for internal services

Serialization is a hidden contributor to both CPU usage and tail latency.
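One way to picture "cache pre-built views" is to store the already-serialized bytes and serialize again only when the entity changes. The sketch below assumes a caller-supplied serializer function (standing in for a JSON library call) and a cache key that includes a version, such as `product:42:v7`. The `SerializedViewCache` class is hypothetical and unbounded; a real cache would need eviction.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

final class SerializedViewCache<T> {
    private final Map<String, byte[]> cache = new ConcurrentHashMap<>();
    private final Function<T, String> serializer;                  // stand-in for a JSON library call
    final AtomicInteger serializations = new AtomicInteger();      // counts real serialization work

    SerializedViewCache(Function<T, String> serializer) {
        this.serializer = serializer;
    }

    /** The key must change whenever the entity changes, for example by embedding a version. */
    byte[] get(String key, T entity) {
        return cache.computeIfAbsent(key, k -> {
            serializations.incrementAndGet();
            return serializer.apply(entity).getBytes(StandardCharsets.UTF_8);
        });
    }
}
```

Repeated reads of a hot resource then cost a map lookup instead of a full serialization pass, which helps most for large responses that change rarely.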

Rate Limiting and Circuit Breakers

Protect services from overload using:

- token buckets

- sliding window rate limiting

- circuit breakers (Resilience4j; Hystrix is in maintenance mode and best avoided for new projects)

- bulkheads

- retries with exponential backoff

These patterns improve fault tolerance, stability, and availability under stress.
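Two of these patterns are small enough to sketch directly: a token bucket and an exponential backoff schedule. Both classes below are hypothetical illustrations, not the Resilience4j API. The bucket takes the current time as a parameter so its behavior is deterministic, and the backoff omits the random jitter that production retry logic usually adds.

```java
/** Token bucket: allows bursts up to capacity, then a steady refill rate. */
final class TokenBucket {
    private final long capacity;
    private final double refillPerNano;
    private double tokens;
    private long lastRefillNanos;

    TokenBucket(long capacity, double refillPerSecond, long nowNanos) {
        this.capacity = capacity;
        this.refillPerNano = refillPerSecond / 1_000_000_000.0;
        this.tokens = capacity;          // start full
        this.lastRefillNanos = nowNanos;
    }

    /** Takes one token if available; pass a monotonic clock such as System.nanoTime(). */
    synchronized boolean tryAcquire(long nowNanos) {
        if (nowNanos > lastRefillNanos) {
            tokens = Math.min(capacity, tokens + (nowNanos - lastRefillNanos) * refillPerNano);
            lastRefillNanos = nowNanos;
        }
        if (tokens >= 1.0) {
            tokens -= 1.0;
            return true;
        }
        return false;   // the caller would typically answer with HTTP 429
    }
}

/** Exponential backoff without jitter: base * 2^attempt, capped. */
final class Backoff {
    static long delayMillis(int attempt, long baseMs, long capMs) {
        long exponential = baseMs << Math.min(Math.max(attempt, 0), 20);   // clamp the shift to avoid overflow
        return Math.min(capMs, exponential);
    }
}
```

A bucket created as `new TokenBucket(100, 50.0, System.nanoTime())` would allow a burst of 100 requests and then about 50 per second. Retries should be limited to idempotent operations, since retrying a non-idempotent call can duplicate its effect.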

API Design Optimization

API shape influences the number of requests, payload size, client workflow, and round-trip latency.

Break Up Chatty APIs and Reduce Sequential Calls

Chatty APIs require clients to make multiple back-and-forth requests.

Fix with:

- aggregated endpoints

- ?include= or ?expand= patterns

- batch operations

- server-driven pagination

Reducing N sequential calls into one aggregated response dramatically reduces network overhead and latency.
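An aggregated endpoint can be sketched as a server-side fan-out: the three lookups a client would otherwise make sequentially run in parallel next to the data, and the client pays for one round trip. The `DashboardAggregator` class, the `/dashboard` route, and the three suppliers are hypothetical stand-ins for real service or repository calls.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.function.Supplier;

final class DashboardAggregator {
    /** Handler body for a hypothetical GET /dashboard: one client round trip instead of three. */
    static Map<String, Object> load(Supplier<Object> profile,
                                    Supplier<Object> orders,
                                    Supplier<Object> notifications,
                                    Executor executor) {
        // Start all three lookups before waiting on any of them.
        CompletableFuture<Object> p = CompletableFuture.supplyAsync(profile, executor);
        CompletableFuture<Object> o = CompletableFuture.supplyAsync(orders, executor);
        CompletableFuture<Object> n = CompletableFuture.supplyAsync(notifications, executor);

        Map<String, Object> body = new LinkedHashMap<>();
        body.put("profile", p.join());
        body.put("orders", o.join());
        body.put("notifications", n.join());
        return body;
    }
}
```

Server-side latency becomes roughly that of the slowest lookup rather than the sum of all three. A fuller version would add per-call timeouts and decide whether a failed section should fail the whole response or be returned as partial data.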

Reduce Payload Size and Unnecessary Fields

Large payloads waste both:

- network bandwidth

- JSON parsing time

Optimize with:

- field filtering (?fields=id,name)

- pagination (limit, cursor)

- partial responses

- compression (gzip, Brotli)

Smaller payloads improve throughput and end-user latency.
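Field filtering and cursor pagination can both be sketched as small functions over in-memory data. The `PartialResponses` class below is hypothetical. In a real service the filter would ideally be pushed down into the database query so that unneeded columns and rows are never loaded at all.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class PartialResponses {
    /** Keeps only the fields named in a ?fields=id,name style parameter. */
    static Map<String, Object> select(Map<String, Object> resource, String fieldsParam) {
        if (fieldsParam == null || fieldsParam.isBlank()) return resource;   // no filter requested
        Map<String, Object> out = new LinkedHashMap<>();
        for (String field : fieldsParam.split(",")) {
            String name = field.trim();
            if (resource.containsKey(name)) out.put(name, resource.get(name));
        }
        return out;
    }

    /** Cursor pagination over ids sorted ascending: up to limit ids greater than cursor. */
    static List<Long> page(List<Long> sortedIds, long cursor, int limit) {
        List<Long> out = new ArrayList<>();
        for (long id : sortedIds) {
            if (id > cursor && out.size() < limit) out.add(id);
        }
        return out;   // the last id returned becomes the next cursor
    }
}
```

Cursor pagination keeps each page cheap even deep into a result set, because the equivalent SQL (`WHERE id > ? ORDER BY id LIMIT ?`) can seek directly through an index instead of skipping rows the way a large OFFSET does.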

Data Layer Optimization (Database & Storage)

In real systems, most slow APIs are slow because the database is slow.

Avoid N+1 Queries and Reduce Over-Fetching

N+1 happens when the API runs one query to fetch a list of N records and then runs another query for each record to load its related data. The result is N+1 round trips to the database where one or two would do.

Fix with:

- joins

- batching

- ORM fetch strategies

- preloading with IN queries

This reduces query count, DB load, and p99 response time.
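The difference is easiest to see by counting round trips. The sketch below uses a hypothetical in-memory `AuthorRepo` whose `queryCount` field stands in for database calls; the SQL in the comments indicates what each method would issue against a real `authors` table, which is itself an assumed schema.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class AuthorRepo {
    private final Map<Long, String> table = new HashMap<>();
    int queryCount = 0;   // stands in for database round trips

    AuthorRepo(Map<Long, String> rows) {
        table.putAll(rows);
    }

    /** SELECT name FROM authors WHERE id = ? */
    String findById(long id) {
        queryCount++;
        return table.get(id);
    }

    /** SELECT id, name FROM authors WHERE id IN (...) */
    Map<Long, String> findByIds(List<Long> ids) {
        queryCount++;
        Map<Long, String> out = new HashMap<>();
        for (long id : ids) {
            if (table.containsKey(id)) out.put(id, table.get(id));
        }
        return out;
    }

    /** N+1 shape: one lookup per post. */
    List<String> namesOneByOne(List<Long> authorIdPerPost) {
        List<String> names = new ArrayList<>();
        for (long id : authorIdPerPost) names.add(findById(id));
        return names;
    }

    /** Batched shape: one IN query, then an in-memory join. */
    List<String> namesBatched(List<Long> authorIdPerPost) {
        Map<Long, String> byId = findByIds(authorIdPerPost);
        List<String> names = new ArrayList<>();
        for (long id : authorIdPerPost) names.add(byId.get(id));
        return names;
    }
}
```

For three posts, `namesOneByOne` costs three lookups while `namesBatched` costs one, and the gap grows linearly with page size. ORMs expose the same idea through fetch joins and batch fetching settings.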

Use Proper Indexing and Query Shaping

Indexes improve read performance but slow writes. Tune based on workload.

Use:

- covering indexes

- composite indexes

- query plans to confirm that indexes are actually used

- narrower queries that avoid full table scans

- fewer leading-wildcard and regex filters, which usually cannot use an index

Tools such as EXPLAIN (which shows the query plan) and slow query logs help identify issues.

Good indexing is one of the most impactful database optimization strategies.

Tune Connection Pools and Reuse Connections

Creating database connections is expensive.

Use:

- HikariCP for Java (widely used, and the default pool in Spring Boot)

- properly sized pools (small but sufficient)

- idle connection eviction, so stale connections are not reused

- connection, idle, and maximum-lifetime timeouts

Correct pool sizing avoids thread blocking, deadlocks, and DB overload. Incorrect pool sizing is a common cause of production slowness.
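A rough starting point for pool size follows from Little's Law: the average number of connections in use equals the request rate multiplied by the time each request holds a connection. The sketch below turns that into a small calculation. The `PoolSizing` class and the headroom factor are assumptions, and the result is only an estimate to validate under load, not a HikariCP setting to copy.

```java
final class PoolSizing {
    /**
     * Little's Law estimate: connections in use = arrival rate * hold time.
     * headroom (for example 1.5) leaves room for bursts above the average.
     */
    static int connectionsNeeded(double requestsPerSecond, double avgHoldMillis, double headroom) {
        double inUse = requestsPerSecond * avgHoldMillis / 1000.0;
        return (int) Math.max(1, Math.ceil(inUse * headroom));
    }
}
```

For example, 200 requests per second that each hold a connection for 20 ms keep about 4 connections busy on average, so a pool of around 6 covers moderate bursts. This is why small pools are often sufficient, and why reducing query time shrinks the pool you need.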

Observability, Monitoring, and Performance Testing

You cannot optimize what you cannot measure.

Use:

- APM and observability tools (New Relic, Datadog, Grafana, OpenTelemetry)

- metrics: p95, p99 latency, RPS, error rates

- distributed tracing

- load testing (k6, Gatling, JMeter)

Regular performance testing prevents regressions and ensures API SLAs remain healthy.
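To make p95 and p99 concrete, here is a small nearest-rank percentile calculation over raw latency samples. The `Latency` class is a hypothetical helper. Monitoring systems typically estimate percentiles from histograms rather than sorting every sample, so treat this as an illustration of the definition.

```java
import java.util.Arrays;

final class Latency {
    /** Nearest-rank percentile: the smallest sample with at least p percent of samples at or below it. */
    static long percentile(long[] samplesMillis, double p) {
        if (samplesMillis.length == 0) throw new IllegalArgumentException("no samples");
        long[] sorted = samplesMillis.clone();   // leave the caller's array untouched
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0);   // 1-based rank
        return sorted[Math.max(1, rank) - 1];
    }
}
```

With 100 samples where 98 take 10 ms, one takes 50 ms, and one takes 900 ms, the median and p95 are both 10 ms while the maximum is 900 ms. Averages and medians hide the slow requests that a few users actually experience, which is why tail percentiles belong in SLAs and alerts.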

Checklist: REST API Performance Optimization

- Tune thread pools and queueing behavior (Java examples: ExecutorService & Thread Pools in Java — A Practical Guide)

Quick Wins

- Enable HTTP/2

- Apply compression

- Reduce payload size

- Cache at CDN and edge

Medium Complexity

- Optimize thread pools

- Add async workflows

- Tune connection pools

- Reduce DB queries

Long-Term Improvements

- Redesign chatty APIs

- Introduce gRPC internally

- Adopt event-driven architectures

- Improve database schema and indexing

Conclusion

REST API performance optimization requires improving latency, throughput, concurrency, and data access across every layer of the system. By tuning network behavior, server concurrency, API design, and database access, you can build APIs that are fast, scalable, resilient, and future-proof.

Hands-On Examples on GitHub

This article explains the concepts and tradeoffs behind REST API performance optimization. For practical, runnable examples, check out the GitHub repository below.

FAQ — REST API Performance Optimization

How do I reduce REST API latency?

You can reduce REST API latency by minimizing network round trips, enabling HTTP/2, applying compression, caching responses, optimizing thread pools, and reducing database query time. Measuring p95 and p99 latency helps identify real performance bottlenecks.

What causes slow REST API performance?

Slow REST APIs are commonly caused by excessive network hops, blocking I/O, undersized thread pools, inefficient serialization, chatty API design, N+1 database queries, and poorly indexed database tables.

How do I optimize database performance in REST APIs?

Database performance can be improved by eliminating N+1 queries, using proper indexing, batching queries, tuning connection pools, and reducing over-fetching. Most API latency issues originate in the data layer rather than application logic.

Should I use REST or gRPC for performance?

REST is best for public APIs and browser clients, while gRPC is better suited for internal service-to-service communication. gRPC offers lower latency and smaller payloads through HTTP/2 and Protocol Buffers, making it ideal for high-throughput systems.

How do I scale REST APIs under high load?

REST APIs scale best by combining caching, load balancing, horizontal scaling, rate limiting, asynchronous processing, and efficient database access. Observability and load testing are critical to ensure scaling strategies work in production.
